OpenAI's Paper on Hallucinations

Hallucinations need not be 'mysterious.' Cheers, OpenAI, glad you caught up.

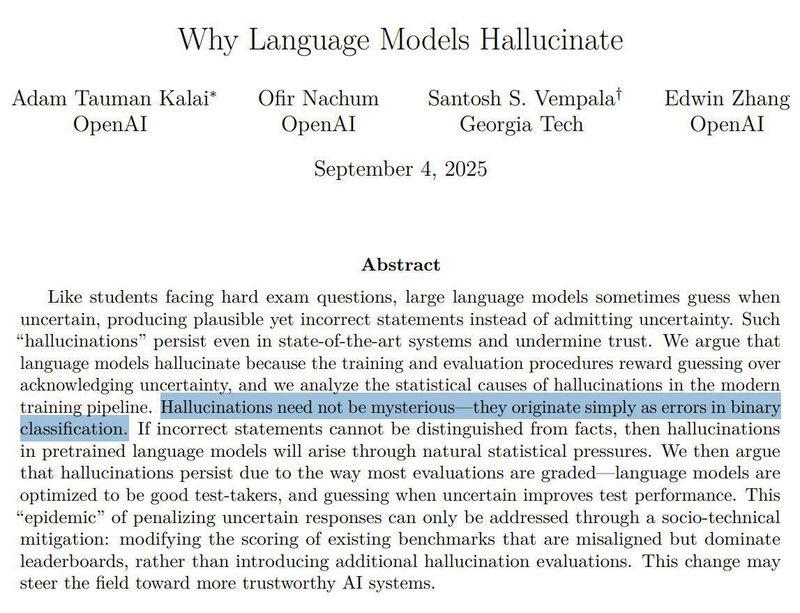

Key Quotes from the Paper

On the core problem of incentives

“Language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty.”

On the test-taking mindset

“Think about it like a multiple-choice test. If you do not know the answer but take a wild guess, you might get lucky and be right. Leaving it blank guarantees a zero.”
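The arithmetic behind that quote is easy to check. Here is a minimal sketch, assuming the simplest possible grader: 1 point for a correct answer, 0 for a wrong one, and 0 for leaving it blank. The function name and the probabilities are made up for illustration; they are not from the paper.

```python
def expected_score(p_correct: float) -> float:
    """Expected score for answering under binary grading (assumed: 1 if right, 0 if wrong)."""
    return p_correct * 1.0 + (1.0 - p_correct) * 0.0


# Assumption: "I don't know" is graded exactly like a wrong answer.
ABSTAIN_SCORE = 0.0

# Hypothetical chances of a guess being right, from near-hopeless to coin flip.
for p in (0.01, 0.25, 0.5):
    print(f"p={p}: guess={expected_score(p)}, abstain={ABSTAIN_SCORE}")
```

Under this grader, any nonzero chance of being right beats abstaining, so a model tuned to maximize the score has no reason to ever leave an answer blank.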

On statistical inevitability

“Hallucinations need not be mysterious—they originate simply as errors in binary classification. If incorrect statements cannot be distinguished from facts, then hallucinations in pretrained language models will arise through natural statistical pressures.”

On the false promises of accuracy

Claim: “To measure hallucinations, we just need a good hallucination eval.”

Finding: “Hallucination evals have been published. However, a good hallucination eval has little effect against hundreds of traditional accuracy-based evals that penalize humility and reward guessing.”

On the possibility of abstention

Claim: “Hallucinations are inevitable.”

Finding: “They are not, because language models can abstain when uncertain.”
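What "abstain when uncertain" could look like in practice: a rough sketch, assuming a grader that gives 1 point for a correct answer, 0 for "I don't know", and subtracts t/(1-t) points for a wrong answer, where t is a confidence threshold. The function names are hypothetical, and the sketch simply takes the model's `confidence` as given and trustworthy, which is a big assumption.

```python
def wrong_answer_penalty(threshold: float) -> float:
    """Points lost for a wrong answer under the assumed t/(1-t) scoring rule."""
    return threshold / (1.0 - threshold)


def expected_score_if_answering(confidence: float, threshold: float) -> float:
    """Expected score of answering: +1 when right, minus the penalty when wrong."""
    return confidence - (1.0 - confidence) * wrong_answer_penalty(threshold)


def decide(confidence: float, threshold: float) -> str:
    """Answer only when answering beats the 0 points earned by abstaining."""
    if expected_score_if_answering(confidence, threshold) > 0.0:
        return "answer"
    return "abstain"


# Hypothetical confidences, checked against a 0.75 threshold.
for c in (0.5, 0.75, 0.9):
    print(c, decide(c, threshold=0.75))
```

With this penalty, answering only pays off when confidence is above the threshold, so guessing stops being the score-maximizing move. Whether the confidence number itself means anything is a separate question.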

On calibration vs accuracy

Claim: “Avoiding hallucinations requires a degree of intelligence which is exclusively achievable with larger models.”

Finding: “It can be easier for a small model to know its limits… being ‘calibrated’ requires much less computation than being accurate.”

____________________________

So, OpenAI recently published a new paper after a long silence. After all this time, the groundbreaking insight is… LLMs hallucinate because they were trained that way.

Wow.

“Hallucinations need not be mysterious”? What? Did you pull that wisdom off Altman’s fridge magnet set between “Live Laugh Love” and “Disruption is Inevitable”?

The entire paper reeks of ChatGPT's writing style. Ironically, it reads like an overconfident LLM writing about its own overconfidence and hallucination. It just feels lazy: a lot of words for very little. For the company that’s supposed to be the leading force in generative AI, and one that’s been quiet on publishing papers for years, you’d think this would be something big, something on the level of GPT-IMG1. Instead, we got the academic equivalent of the disappointment that was GPT-5.

The main takeaway is basically OpenAI admitting that all LLM makers are deliberately training their models to hallucinate, including the supposedly “ethical, safe, helpful” folks at Anthropic. They’re not stopping any time soon either. Why? Because the most important thing in this world is benchmarks. All those arbitrary benchmarks are the only way these companies show “progress.” Why risk your frontier model looking dumb by admitting it doesn’t know, or waste compute running careful reasoning and tools, when you can just spit out the most likely-looking words instead?

✅ The Verdict

I’m not an expert, but the paper doesn’t actually show that LLMs understand truth or falsehood. How can they “abstain” if they don’t even know whether what they produce is correct in the first place? They have no built-in notion of true or false. The proposed fix is basically: make them predict when they’re wrong and abstain. But if they can’t reliably detect their own errors, then obviously hallucinations remain. Which is to say: hallucination is a feature, not a bug.

Thanks, OpenAI — or rather, ChatGPT — or maybe ChatGPT writing about ChatGPT — the world’s first self-referential hallucination loop — for the insightful paper. What’s the next one? Big models require lots of energy — water is moist — sky is blue — benchmarks are bullshit —