Thoughts on Google's Veo 3

So, Google’s Veo 3 finally graced the UK with its presence, and like many, I figured, "why not try that 'free' offering?"

First things first, let’s not waste time on the whole ethics charade with these AI "toys". It’s 2025. You either use them or give the companies trying to force them down your throat a big middle finger. I use them occasionally as toys, whilst also doing the latter. The big guns like Google and OpenAI couldn’t give a shit about ethics unless a massive lawsuit or a regulator breathing down their neck forces their hand, and even then it’s just a strategic PR ‘apology’ before going right back at it.

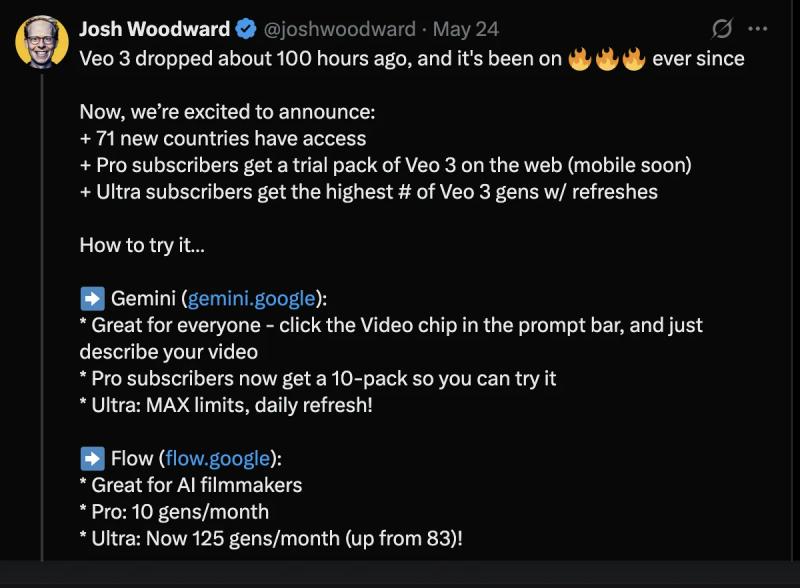

Now, onto Google's "generous" offerings. If you’re a Pro plan user, you get a one-time, non-refreshing, super-exciting trial of 10 Veo 3 videos. YEEE BOI! After that initial burst of magic, you get 1000 credits to use on Flow (Google's version of Sora). Since one Veo 3 clip costs 100 credits, and this refreshes monthly, yes, essentially as a Pro user you get 10 vids a month.
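For anyone who wants the maths spelled out, here's a tiny sketch using only the numbers above (1000 credits a month, 100 credits per Veo 3 clip). The function name is my own, nothing official from Google.

```python
# Figures from the post: 1000 Flow credits per month on Pro,
# and 100 credits per Veo 3 clip.
MONTHLY_CREDITS = 1000
CREDITS_PER_CLIP = 100


def clips_per_month(monthly_credits: int, credits_per_clip: int) -> int:
    """Whole clips that a monthly credit allowance covers."""
    return monthly_credits // credits_per_clip


print(clips_per_month(MONTHLY_CREDITS, CREDITS_PER_CLIP))  # 10
```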

Thinking of forking out £/$250 for the Ultra plan? Well, officially, they said 125 clips a month, up from 83, wow!!!! (check the pic). Sounds way better, doesn't it? Except there are plenty of reports from users complaining they get locked out for days after messing around with 2-5 videos. I can’t verify or test this since I don’t have an Ultra sub, but clearly Pro users are getting the scraps here. Yeah, yeah, it’s new cutting-edge tech that’s intensely resource-hungry and still in the rollout phase, but they’re literally Google, there’s no excuse.

So, I jumped into my generous "free" usage. Honestly, the limitations are a complete joke. Unless it’s simple, you are not getting what you want in one shot (100 credits in this case). If you’re attempting anything remotely creative, prepare to watch those credits go down the drain (actually, you can’t watch them unless you click on your profile to check; they purposely hid the counter behind a click). You’ll be sitting there, waiting minutes for each generation, or 4 at once if you’re a high roller, then tweaking, then generating again, all while your precious allowance vanishes into the digital ether.
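To put a rough number on how fast the allowance goes, here's a back-of-the-envelope sketch. The 1000 credits and 100 credits per clip come from the Pro plan; the "attempts per keeper" values are purely my own assumption for illustration, not anything measured.

```python
def usable_clips(monthly_credits: int, credits_per_clip: int,
                 attempts_per_keeper: int) -> int:
    """Keepers per month if every usable clip costs a fixed number of attempts.

    attempts_per_keeper is a made-up illustrative figure, not a measurement.
    """
    generations = monthly_credits // credits_per_clip
    return generations // attempts_per_keeper


# Pro plan figures from the post: 1000 credits, 100 credits per clip.
for attempts in (1, 2, 3, 5):
    keepers = usable_clips(1000, 100, attempts)
    print(f"{attempts} attempt(s) per keeper -> {keepers} usable clip(s) a month")
```

At three tries per keeper, the monthly allowance works out to three usable clips, with one generation left over.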

And the grand dream of making actual long-form content? That usually means you’re just duct-taping a load of 5-to-8-second clips together. Cue the AI hype sheep chorus: 'Game changer! Hollywood is SO done! Imagine what someone can do with this!' The reality? Good luck getting anything consistent or coherent out of that mess. Oh, and don't forget the kicker: every single one of those 8-second clips, especially the ones you delete, also torched a ridiculous amount of energy for absolutely nothing.

By now, your social media is probably a cesspool of generic Veo 3 clips. Endless variations of "OMG, look how realistic!", the same old tired “hur hur AI don’t know they’re AI”, the same visual clichés, etc. Speaking of those "realistic" humans, my money's on that being a direct result of Google having endless terabytes of YouTube content to plunder for training. I mean, Google OWNS YouTube, the planet's biggest video and audio library. It's a data free-for-all for them. This likely also explains why, no matter what some obscure API document might mumble about other aspect ratios for different Veo versions, when you’re actually using Veo 3 in Flow or the web app, you’re stuck in 16:9. I guess even they don’t like YouTube Shorts, huh?

Anyway, I couldn’t give a toss about generating hyper-realistic humans, or the ethical swamp on top of the general problems that come with them. So back to the vids I tried; keep in mind I only managed a few usable ones.

https://i.postimg.cc/28R8Mx76/Screenshot-2025-05-31-195158.png

This is my friend's cat Jeffrey. I took a picture and tried img2vid; the "ingredients" option, which I suspect would be a better method, requires Ultra. The prompt was a simple test: "make sure to only use my cat from this frame, and he looks the same and consistent, he is drinking tea by the seaside, relaxing".

https://youtu.be/0qrRQ93h9hs

As we can see, Jeff does not look the same: he has become CGI-like and been transported to a beach, rather than smoothly transitioned. In another one, where I explicitly told it to transition, it didn't.

The second test with Jeff, this time changing the prompt just a little: "make sure my cat looks the same and consistent, he is drinking matcha by the seaside, holding the cup with his paws. relaxing".

https://youtu.be/dy9Rv8OFaRc

Other than the weird sound at the start, this one did follow most of my instructions, but it ignored a major one: the "seaside" part.

https://i.postimg.cc/WbGbvJ57/Screenshot-2025-05-31-195217.png

This is my friend's second cat, stinky Elvis. Again a simple prompt, yet it failed several times, adding weirdness I did not ask for: "make sure the cat is the same and consistent, he's munching on an extremely delicious looking roast whole chicken".

https://youtu.be/EbdCNFxW4ZE

For the most part, this clip was decent, including the sounds it inferred.

Alright, by now I'm low on gens, and img2vid for Veo 3 is a mixed bag, a hit-or-miss type of thing, which would be fine for genai if it weren't so limited and resource-intensive. You're also stuck with one original frame without Ultra, I think.

Next, a quick test of txt2vid: "biblical accurate angel".

https://youtu.be/6iSwqSVJit0

Well, disappointing for sure. Yes, I could have directed it more, but I wanted to see what it would infer from a simple prompt. In this case, it inferred very poorly.

Next I decided on a more detailed prompt: "inside a long forgotten alien temple, desolated and shrouded in whispers of its bygone race. the camera tracks forward through a vast chamber where ancient, weightless relics slowly drift and rotate in the air. strange symbols faintly glow on the walls, pulsating irregularly. an unsettling, low frequency thrum fills the air, punctuated by the faint, distant clatter of something unseen in the darkness".

https://youtu.be/V6Emj34yiY0

Now, right away, it followed most of my instructions well, both the visuals and the sounds. It did, however, ignore the "camera tracks forward" and "pulsating irregularly" parts.

Then I tried something more horror-fantasy: "pov: looking out from a cracked porthole of a sinking, ancient submarine, colossal, bioluminescent leviathan with rows of needle-like teeth and vacant, milky eyes approaches from the oppressive darkness, its whale song a distorted, haunting melody"

https://youtu.be/Dpta4W_ZFow

While pretty creepy, it completely ignored my sound instructions, the cracked glass became broken instead, and the leviathan was more fish-like.

For my last attempt I tried another submarine, without sound instructions, to let the model infer them: "a dark fantasy submarine, carved from bone and obsidian, navigates a black, oily sea under a blood-red sky with two moons, spectral figures with hollow eyes drift through the bulkheads as the captain, a gaunt figure with elongated fingers, consults a map made of human skin".

https://youtu.be/8AJOIy8EYvc

Well, it skipped through the first part really quickly, with the sub not submerged, while everything else looks decent. The interiors, however, did not match, the spectral figures became spooky skellies instead, and the growling from the captain wasn't synced; the other sounds were fine.

As a bonus clip, I managed to get both of them together with Veo 2: "the 2 cats are having a cinematic battle over a deliciously roasted whole chicken".

https://youtu.be/6V70lcTpBkE

✅ The Verdict

So, what's my final word on Veo 3? It's certainly better than Sora in most aspects, as it should damn well be: Sora's several months old now, and on top of that, the version we're getting is the watered-down, optimised-for-speed-and-cost Turbo version (I'd take the unlimited fast gens any day over Veo, as a toy). Look, I certainly would like to play with it some more, it’s definitely fun, in that dumb, distracting way. But let's be brutally honest: the limitations are pretty clear from just the tests I ran. Like every other genai toy, you’re still at the absolute mercy of the model’s 'temperature', no matter how god-tier you think your 'prompting skills' have become.

Using this for actual, serious content creation? It certainly has some niche uses, like ideation or visualisation. But you'd better also be ready for some heavy post-editing and processing. And that's after you’ve burned through a ridiculous pile of credits and time on prompting.

Using it purely as a toy? Then you’re just torching a scandalous amount of energy for a few forgettable seconds of stupid, pointless shit that’ll just have you mashing that ‘generate’ button again anyway, like a digital lab rat hitting a lever.

Or, of course, you could join the ranks of those churning out endless, shitty, generic 'realistic' Veo 3 clips, singing its praises to the utterly clueless masses. It's just sheep leading other sheep straight off a cliff.